Big Data

The limitations of conventional database systems with respect to Big Data arise from the large volume of both structured and unstructured data, the complexity of that data, the speed at which it moves, and database architectures that are poorly suited to such workloads.

In its endeavor to keep pace with ever-changing technologies, Intersoft has developed a core competency in Big Data platforms, enabling it to deliver robust, high-quality solutions across domains such as healthcare and cybersecurity.

WE HAVE THE FOLLOWING MATURE BIG DATA PRACTICES IN PLACE TO PROVIDE EFFECTIVE AND EFFICIENT BIG DATA SOLUTIONS:

- Advisory services

- Implementation services

- Managed services

OUR BIG DATA EXPERTISE INCLUDES

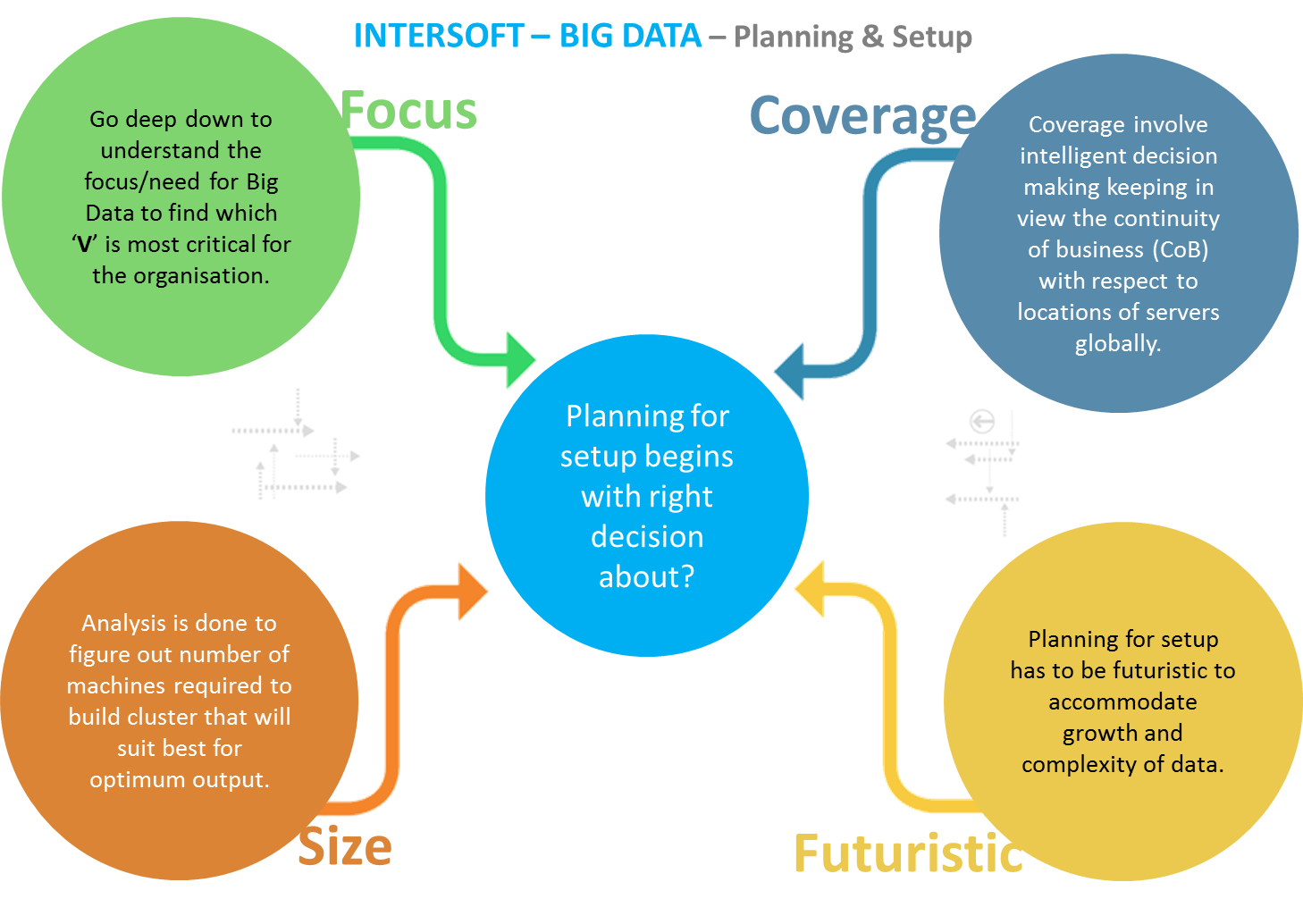

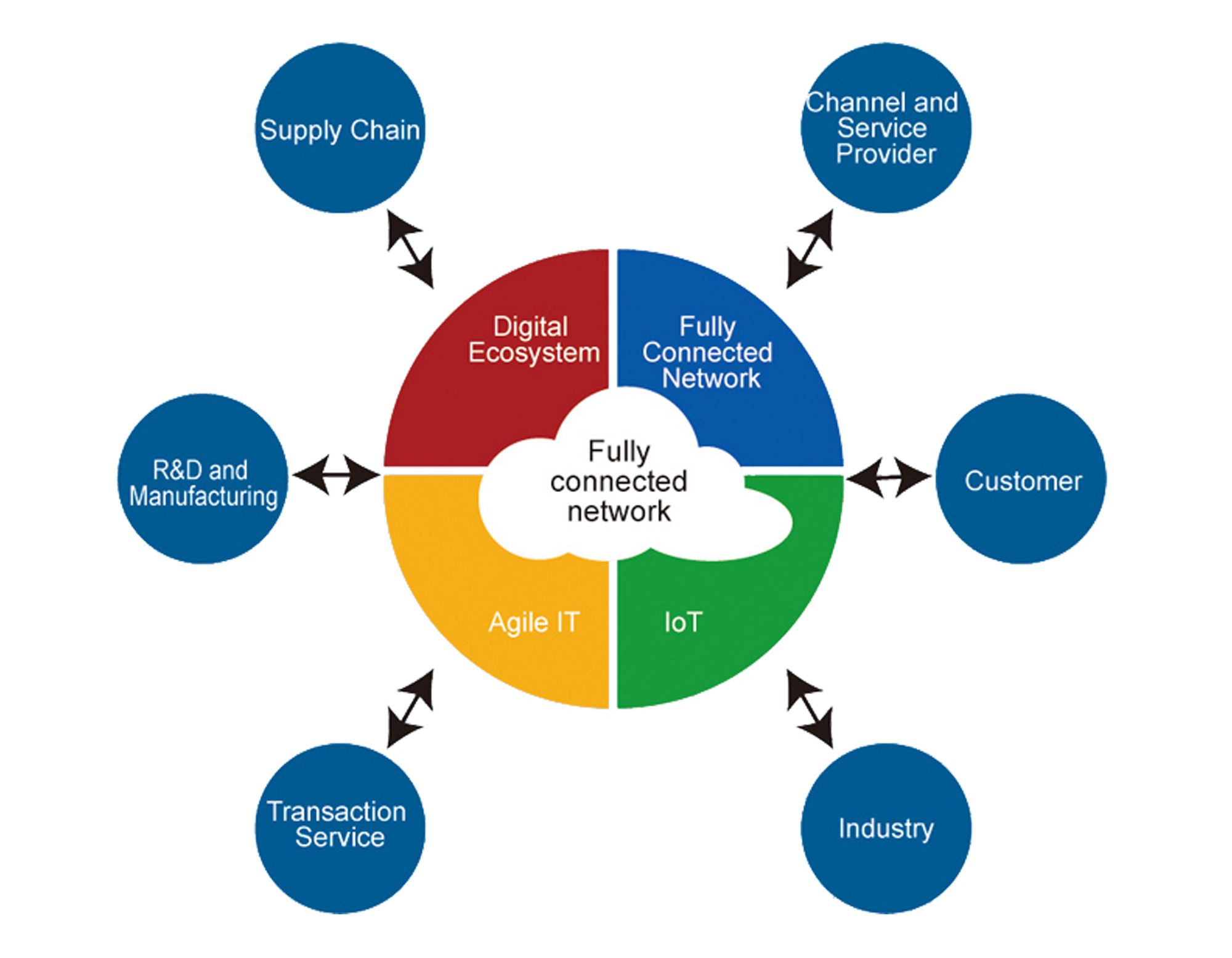

- Architecture/Framework design

- Hardware sizing

- Cluster setup and management

- Processing of structured and unstructured data

- Distributed computing and storage using Hadoop

- Data warehousing

- Data mining

OUR SERVICES INCLUDE

Big Data Strategy and Business Case Formulation

Enterprise Big Data Roadmap

Jumpstart and Accelerate Big Data Initiatives

Prototyping and Proof of Concepts

Environment Setup and Configuration

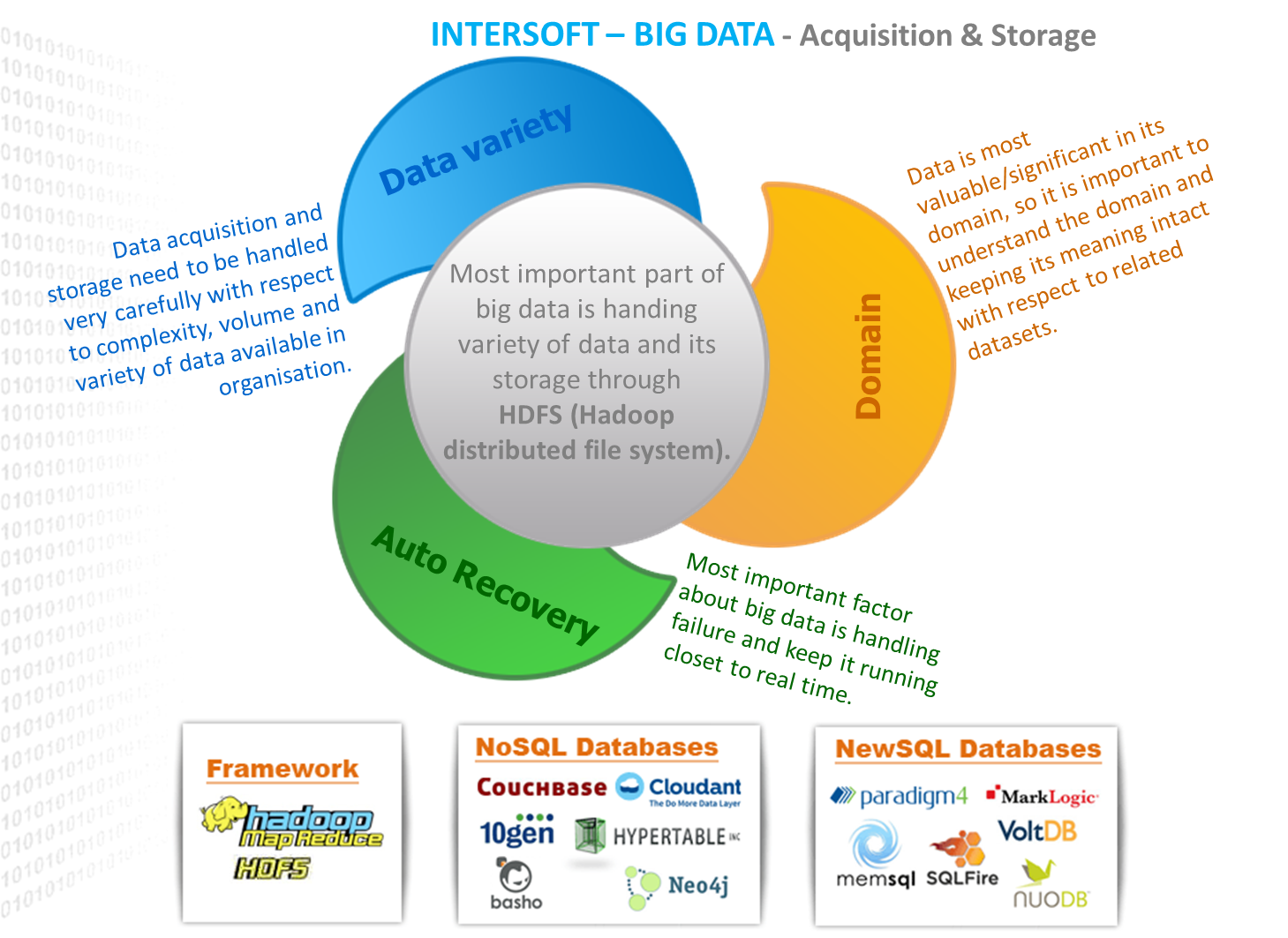

Data Acquisition and Marshaling

Data Management

System Updates and Maintenance

Data Analytics and Reporting

Data Quality, Governance and Maturity

METHODOLOGY

- Big Data experience: Hadoop (HDFS) on Hortonworks and Cloudera distributions

- File transfer utilities experience: Flume, Sqoop

- NoSQL database experience: HBase, Solr

- Programming languages/algorithms experience: Java, Python, MapReduce, Mahout

- Query languages experience: Hive (HQL), Pig and Impala